A Rust FFI adventure in unsafety

Recommended reading: ownership, unsafe Rust

Introduction🔗

This blog post covers my adventure in fixing a bug in the Rust bindings for the Capstone C library, a disassembly library that supports several CPU architectures. The capstone-rs crate attempts to provide a Rusty, object-oriented interface.

You do not necessarily need previous experience in C code or foreign function (FFI) bindings to understand this blog post.

I will cover some of the steps I used to debug this problem. Hopefully, readers can learn from my mistakes.

Some code snippets have been simplified.

Capstone intro🔗

In order to make it easier to understand the discussion, I'll give a brief overview of Capstone and capstone-rs.

Capstone interprets an array of bytes as an array of instructions, providing information such as the address of the instruction, which registers are written, and information about the operands.

Capstone does not parse executable file formats such as ELF, Mach-O, or PE.

Architecture🔗

The code we are discussing is broken into three parts (from lowest level to highest level):

- Capstone: base C library that provides functionality (I am not a maintainer of this library)

- capstone-sys: low-level Rust bindings (contains unsafe

externalfunction definitions and types)- Contains a captive version of the Capstone C library that is built with the Rust code

- Talks directly to the C library

- Exposes Rust types that correspond directly to the C types

- capstone-rs: high-level Rust bindings (contains a safe, "Rustic" object-oriented interface)

- Talks directly to capstone-sys

When I refer to "Capstone" or "the C code", I am referring to the base C library. When I refer to "the Rust code", I usually mean capstone-rs (unless otherwise specified).

Capstone examples🔗

The general workflow for Capstone C interface is:

- Get a Capstone handle

- Disassemble instructions in a buffer

- Close handle, freeing resources

Both example programs below disassemble an array of X86_64 instructions. The programs output the following:

count = 4

0x1000: push rbp

0x1001: mov rax, qword ptr [rip + 0x13b8]

0x1008: jmp 0x8ae21

0x100d: xor r12d, r12d

C example:

#include <stdio.h>

#include <inttypes.h>

#include <capstone/capstone.h>

#define X86_CODE \

"\x55\x48\x8b\x05\xb8\x13\x00\x00\xe9\x14\x9e\x08\x00\x45\x31\xe4"

int main(void) {

csh handle;

cs_insn *insn;

size_t count;

int rc = 0;

// Get handle to Capstone library

if (cs_open(CS_ARCH_X86, CS_MODE_64, &handle) != CS_ERR_OK) {

return 1;

}

// turn ON extra detail

cs_option(handle, CS_OPT_DETAIL, CS_OPT_ON);

// disassemble instructions

count = cs_disasm(

handle, // Capstone handle

(const uint8_t *) X86_CODE, // byte array to disassemble

sizeof(X86_CODE)-1, // length of byte array

0x1000,

0, // disassemble as many instructions as possible

&insn // reference to output

);

if (count > 0) {

printf("count = %zu\n", count);

for (size_t i = 0; i < count; i++) {

printf("0x%"PRIx64": %-6s %s\n",

insn[i].address,

insn[i].mnemonic,

insn[i].op_str);

}

cs_free(insn, count);

} else {

puts("ERROR: Failed to disassemble given code!\n");

rc = 1;

}

cs_close(&handle);

return rc;

}

Rust example:

extern crate capstone;

use capstone::prelude::*;

const X86_CODE: &'static [u8] =

b"\x55\x48\x8b\x05\xb8\x13\x00\x00\xe9\x14\x9e\x08\x00\x45\x31\xe4";

fn main() {

let mut cs = Capstone::new() // Call builder-pattern

.x86() // X86 architecture

.mode(arch::x86::ArchMode::Mode64) // 64-bit mode

.detail(true) // Generate extra instruction details

.build()

.expect("Failed to create Capstone object");

// Get disassembled instructions

let insns = cs.disasm_all(X86_CODE, 0x1000)

.expect("Failed to disassemble");

println!("count = {}", insns.len());

for insn in insns.iter() {

println!(

"0x{:x}: {:6} {}",

insn.address(),

insn.mnemonic().unwrap_or(""),

insn.op_str().unwrap_or("")

);

}

// No explicit free() needed

// Capstone::drop() calls cs_close()

}

Initial analysis🔗

A pull-request first alerted to me that cargo test failed sometimes on Mac OS.

An example failure:

$ cargo test

...

test test::test_instruction_group_ids ... FAILED

...

failures:

---- test::test_instruction_group_ids stdout ----

thread 'test::test_instruction_group_ids' panicked at 'Expected groups {1} does NOT match computed insn groups {110} with ', src/lib.rs:287:8

note: Run with `RUST_BACKTRACE=1` for a backtrace.

I was unable to reproduce the bug on my GNU/Linux development machine (even after a thousand iterations of cargo test).

I needed a Mac to reproduce the bug.

I first reproduced the bug with my brother-in-law (he provided the Mac computer). This was indeed the worst type of bug; the bug only showed up sometimes.

Hunt for the data race🔗

One case where tests fail only sometimes is a race condition. One type of race condition is a data race.

Data races require:

- More than one thread accessing the same memory location at the same time

- At least one of the threads is writing to the memory location

- At least one of the threads is not correctly "synchronizing" around the memory access

I started by reading over the capstone-rs Rust code but did not find a problem. capstone-rs does not spawn threads.

Then, I decided to look over the Capstone C source code. Capstone was not spawning threads, either.

I realized that the cargo test harness spawns multiple threads (to parallelize tests).

But how could threads access the same memory location?

The only way left to share memory was through global variables. My brother-in-law and I searched the Capstone C code for global variable writes.

We saw that four separate functions called the archs_enable() function.

For example:

cs_err CAPSTONE_API cs_open(cs_arch arch, cs_mode mode, csh *handle)

{

/* Do some checks */

archs_enable();

/* ... */

}

We looked at the body of archs_enable().

The static variable initialized is essentially a global variable (but only "visible" from within the archs_enable() function).

static void archs_enable(void)

{

/* "static" makes this a global variable */

static bool initialized = false;

if (initialized)

return;

ARM_enable();

/* Initialize other architectures */

initialized = true;

}

For each architecture, archs_enable() calls a *_enable() enable function.

We peered inside ARM_enable():

void ARM_enable(void)

{

cs_arch_init[CS_ARCH_ARM] = init;

cs_arch_option[CS_ARCH_ARM] = option;

cs_arch_destroy[CS_ARCH_ARM] = destroy;

cs_arch_disallowed_mode_mask[CS_ARCH_ARM] = ~(CS_MODE_LITTLE_ENDIAN |

CS_MODE_ARM | CS_MODE_V8 | CS_MODE_MCLASS | CS_MODE_THUMB |

CS_MODE_BIG_ENDIAN);

// support this arch

all_arch |= (1 << CS_ARCH_ARM);

}

The arrays cs_arch_init, cs_arch_option, cs_arch_destroy, and cs_arch_disallowed_mode_mask are global!

if (initialized)

return;

/* Initialize global arrays */

initialized = true;

The initialzed global variable was read and modified without a lock!

This was a potential data race.

Safe Rust code guarantees the absence of data races, but the C language makes no such guarantee.

If multiple threads entered archs_enabled() simultaneously, initialized would be false.

Each thread would attempt to initialize the global variables simultaneously.

Afterward, each thread would set initialzed to false.

Since each thread would be attempting to initialize the arrays with the same values (they are constant),

you may be tempted to think that this construction is safe.

However, due to complications such as out of order execution,

a memory barrier is also required.

Otherwise, some CPU cores could "see" that initialized is true but not yet "see" that global arrays are initialized the correct values.

Note: I have since eliminated the upstream data race with a pull-request.

Fix Attempt 1🔗

I first tried to work around this bug from within the Rust code.

My brother-in-law and I investigated the primitives in the std::sync module that could help.

We wanted a primitive that would guarantee that initialization of the global variables would happen exactly once.

To quote the std::sync::Once documentation:

A synchronization primitive which can be used to run a one-time global initialization. Useful for one-time initialization for FFI or related functionality.

This fit our use case perfectly!

However, because archs_enable() was declared as static, the symbol is not "visible" to consumers of library (such as capstone-sys or capstone-rs).

static declarations in C are similar to non-pub declarations in Rust.

In order to work around this issue, we called cs_version() (which calls archs_enable()).

Of the exported Capstone functions that called archs_enable(), cs_version() seemed to have the least overhead.

We defined a Rust function init_global_state() that peforms the initialization.

Since the cs_version() is a C function, we had to use an unsafe block to call the function.

static INIT: Once = ONCE_INIT;

fn init_global_state() {

INIT.call_once(|| {

let mut a = 0;

let mut b = 0;

unsafe { cs_version(&mut a, &mut b) };

});

}

Next, we needed to call init_global_state() before calling a C Capstone function.

That way, we would initialized globals in archs_enable() initialization in a thread-safe way.

In the first pass with my brother-in-law, we inserted calls to init_global_state() at the beginning of any Rust function that called a Capstone C function.

The initial work resulted in a work-in-progress pull-request.

Fine-tuning global initialization🔗

I knew that the global initialization we used was sub-optimal. While it would be safe to add extra synchronization, we would cause unnecessary slowdown in the capstone-rs Rust library. I decided to reason about the problem.

Requirement:

Before the first call to a C function that writes to the global arrays, we must call init_global_state() to initialize safely.

I realized that you can partition Rust functions into two groups:

- impl methods:

functions that are in an

implblock AND take aselfparameter. - bare functions:

all other functions.

These functions are either:

- in an

implblock but do not take aselfparameter - outside of an

implblock

- in an

If we call init_global_state() when we construct a new instance of a type (struct or enum),

then we do not need to call init_global_state() in any of the impl methods of that type.

For example, if we call init_global_state() when we create a Capstone instance,

then we do not need to call init_global_state() in Capstone::disasm_all() (which takes a self parameter).

For these example, assume that MyType is a struct with private fields:

- Library users cannot instantiate the type "manually" by writing:

/* Not allowed since MyType has private fields */ let myvar = MyType { /* ... */ }; - Let's name any function that returns an instance of a type a "constructor". For example:

impl MyType { fn new() -> MyType { /* ... */ } } - Then, as long as all constructors call

init_global_state(), then impl methods do not need to callinit_global_state(). Any time an impl method would be called, aselfparameter is required (by definition of impl method). Thisselfinstance would have been created by a constructor that already calledinit_global_state().impl MyType { fn my_method(&self) { /* The self parameter should have been created by a constructor */ /* (that should have initialized the globals) */ } }

I formalized these claims in a comment:

/// Initialize global Capstone state in C library

///

/// # CLAIMS

///

/// 1. Any function *F* (including methods) that calls a `capstone-sys` function *G* where *G*

/// potentially mutates global state must ensure `init_global_state()` is called first.

/// 2. Let *T* be a `struct`/`enum` with at least one non-public field and methods *M* defined. If

/// all constructors *C* (functions that return type *C*) for *T* call `init_global_state()`,

/// then methods *M* that take a `self` parameter do not need to call `init_global_state()`.

///

/// Any `self` instance should have been been created by a constructor *C* that already called

/// `init_global_state()` because *T* has non-public fields. Hence, consumers of the library

/// cannot construct a *T* manually.

Using this reasoning, I was able to remove several calls to init_global_state(), elminating unnecessary synchronization.

Fix status🔗

After adding the thread-safe initialization code, cargo test would still fail sometimes!

I needed to keep looking...

First attempt at using tools🔗

My first attempt at using tools to help find the bug was not successful. Since I thought my bug was a threading issue, I tried Clang's ThreadSanitizer feature and Valgrind's Helgrind and DRD plugins. Each of the tools only reported false positives.

For example, DRD had output:

...

==14815== Conflicting load by thread 1 at 0x0661782c size 2

==14815== at 0x4C46A1F: memmove (in /usr/lib/valgrind/vgpreload_drd-amd64-linux.so)

==14815== by 0x2D756F: copy_from_slice<u8> (mod.rs:712)

==14815== by 0x2D756F: copy_from_slice<u8> (slice.rs:1693)

==14815== by 0x2D756F: spec_extend<u8> (vec.rs:1886)

==14815== by 0x2D756F: extend_from_slice<u8> (vec.rs:1369)

==14815== by 0x2D756F: write (impls.rs:263)

==14815== by 0x2D756F: <std::io::buffered::BufWriter<W> as std::io::Write>::write (buffered.rs:583)

==14815== by 0x2D77C4: <std::io::buffered::LineWriter<W> as std::io::Write>::write (buffered.rs:895)

==14815== by 0x2D9EBE: write (stdio.rs:465)

==14815== by 0x2D9EBE: <std::io::stdio::Stdout as std::io::Write>::write (stdio.rs:450)

==14815== by 0x21127D: <term::terminfo::TerminfoTerminal<T> as std::io::Write>::write (mod.rs:271)

==14815== by 0x1E3AE4: write<Terminal> (impls.rs:118)

==14815== by 0x1E3AE4: write<std::io::stdio::Stdout> (lib.rs:655)

==14815== by 0x1E3AE4: std::io::Write::write_all (mod.rs:1098)

==14815== by 0x1F1E5A: write_plain<std::io::stdio::Stdout,&alloc::string::String> (pretty.rs:93)

==14815== by 0x1F1E5A: <test::formatters::pretty::PrettyFormatter<T>>::write_test_name (pretty.rs:151)

==14815== by 0x1F217E: <test::formatters::pretty::PrettyFormatter<T> as test::formatters::OutputFormatter>::write_result (pretty.rs:177)

==14815== by 0x1EC321: callback (lib.rs:856)

==14815== by 0x1EC321: test::run_tests_console::{{closure}} (lib.rs:924)

==14815== by 0x1EA9F8: run_tests<closure> (lib.rs:1145)

==14815== by 0x1EA9F8: test::run_tests_console (lib.rs:924)

==14815== by 0x1E6156: test::test_main (lib.rs:286)

==14815== by 0x1E6388: test::test_main_static (lib.rs:320)

...

The stacktraces did not include code that I wrote, only code in the Rust test runner.

&mut self vs. &self🔗

Through compile-time borrow checks, the Rust compiler ensures that there can only be up to one mutable reference OR any number of immutable references to a given variable.

Perhaps I was exposing a non-mut reference where I should be exposing a mut reference?

As the bindings author, I am responsible for describing to the Rust compiler how the borrowing and lifetimes work in the underlying C library.

I decided to check my assumptions where I only took a mutable reference.

In the C code for cs_disasm():

size_t CAPSTONE_API cs_disasm(

csh ud, const uint8_t *buffer, size_t size,

uint64_t offset, size_t count, cs_insn **insn)

{

/* Declare more local variables */

struct cs_struct *handle = (struct cs_struct *)(uintptr_t) ud;

/* Do some checks */

if (handle->arch == CS_ARCH_ARM)

handle->ITBlock.size = 0;

/* Do more stuff */

}

If the architecture is ARM, then cs_disasm() mutates the handle.

In capstone-rs, we expose cs_disasm() through the Capstone::disasm_*() family of methods.

But, the methods take &self parameter, which is not a mutable reference!

As the bindings author, it is my responsibility to expose the correct interface that allows the Rust type system to ensure safety.

It was an easy fix to have the methods take &mut self instead of &self.

Fix status🔗

Even after this second fix, cargo test would still fail sometimes.

I had look elsewhere...

Automatic Send/Sync🔗

At this stage, I was at a loss for what the problem could be.

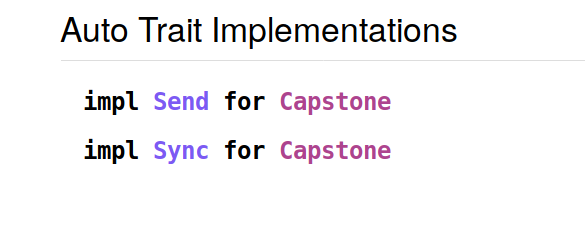

I considered the Send and Sync are "marker" traits:

Send: it is safe to send a type over a channelSync: it is safe to access a type from different threads

Perhaps some capstone-rs types were mistakenly identified as Send and/or Sync?

Maybe Cargo generated tests that ran in parallel and had race conditions?

Looking at the auto-generated cargo doc documentation:

I examined the Rust definition of the Capstone struct:

pub struct Capstone {

csh: csh,

mode: cs_mode,

endian: cs_mode,

syntax: cs_opt_value::Type,

extra_mode: cs_mode,

detail_enabled: bool,

raw_mode: cs_mode,

arch: Arch,

}

Since all of Capstone's fields are Send and Sync, the Rust compiler treated Capstone as Send and Sync.

But, the Capstone type should not be Send nor Sync.

The Capstone C library defines the handle type csh as an alias for size_t (equivalent to usize in Rust).

However, under the hood, csh is cast as a pointer to an opaque type.

How can we tell the Rust compiler stop stop inferring that the Capstone struct is Send/Sync?

According to the nomicon:

raw pointers are neither Send nor Sync (because they have no safety guards)

Since the C library already treated the csh field as a pointer, I just changed the csh type to a *mut c_void.

pub struct Capstone {

csh: *mut c_void,

/* Other fields */

}

Fix status🔗

The Capstone struct was no longer Send/Sync, but cargo test still failed.

I kept searching...

Second attempt at using tools: Valgrind Memcheck🔗

I decided to revisit tools to help find the bugs, specifically Valgrind (since it is the easy to use). I decided to use the Memcheck plugin (the default plugin) to look for non-threading bugs.

Using the system allocator🔗

The Valgrind framework uses dynamic binary instrumentation (DBI) to run instrumentation code.

Valgrind's Memcheck plugin works by tracking allocation calls (such as malloc()) and memory reads/writes.

Memcheck can catch bugs such as invalid memory reads/writes and memory leaks.

To avoid issues, I decided to use the system allocator (instead of the default jemalloc implementation).

To make the nightly toolchain optional, I added an alloc_system feature to toggle the system allocator.

I defined alloc_system feature in Cargo.toml:

[features]

default = []

alloc_system = []

Added conditional checks to enable the system allocator in src/lib.rs:

#[cfg(feature = "alloc_system")]

use std::alloc::System;

#[cfg(feature = "alloc_system")]

#[global_allocator]

static ALLOCATOR: System = System;

Note: Since implementing the alloc_system feature, Rust 1.28 stabilized global allocators.

Replicating the crash on Linux🔗

I ran the tests under the nightly toolchain on my Ubuntu Linux laptop

$ cargo +nightly test --features alloc_system

Finished dev [unoptimized + debuginfo] target(s) in 0.08s

Running target/debug/deps/capstone-7ce80c0a0e7a0946

running 41 tests

test arch::arm64::test::test_arm64shift ... ok

...

test test::test_arch_arm_detail ... ok

test test::test_arch_mips_detail ... FAILED

test test::test_arch_arm64_detail ... ok

...

test test::test_arch_systemz ... ok

test test::test_arch_x86_detail ... FAILED

test test::test_arch_arm ... ok

...

test test::test_x86_names ... ok

test test::test_instruction_group_ids ... FAILED

test test::test_arch_x86 ... ok

test test::test_x86_simple ... ok

test test::test_syntax ... ok

failures:

---- test::test_arch_mips_detail stdout ----

thread 'test::test_arch_mips_detail' panicked at 'index 218 out of range for slice of length 8', libcore/slice/mod.rs:1965:5

note: Run with `RUST_BACKTRACE=1` for a backtrace.

---- test::test_arch_x86_detail stdout ----

thread 'test::test_arch_x86_detail' panicked at 'index 39 out of range for slice of length 8', libcore/slice/mod.rs:1965:5

---- test::test_instruction_group_ids stdout ----

Insn { address: 4096, len: 1, bytes: [144], mnemonic: Some("nop"), op_str: Some("") }

Insn { address: 4097, len: 2, bytes: [116, 5], mnemonic: Some("je"), op_str: Some("0x1008") }

thread 'test::test_instruction_group_ids' panicked at 'Expected groups {InsnGroupId(1)} does NOT match computed insn groups {}', src/lib.rs:482:9

failures:

test::test_arch_mips_detail

test::test_arch_x86_detail

test::test_instruction_group_ids

test result: FAILED. 38 passed; 3 failed; 0 ignored; 0 measured; 0 filtered out

error: test failed, to rerun pass '--lib'

The tests actually failed!

This was the first time cargo test failed on my Linux development machine.

Different tests failed each time (like on Mac OS).

Sometimes, the test program did not complete and exited with SIGILL: illegal instruction.

Running under Valgrind🔗

To make tests more consistent and reduce errors, I passed --test-threads=1 and test_syntax().

At this point, I had the reduced test case to run under Valgrind.

I ran the test binary directly (instead of the cargo test ... invocation) to simplify debugging.

Since, cargo test spawns the test binary as a child process, I would have needed to trace the child process.

$ valgrind --track-origins=yes --leak-check=full \

target/debug/deps/capstone-7ce80c0a0e7a0946 \

--test-threads=1 test::test_syntax

==12465== Memcheck, a memory error detector

==12465== Copyright (C) 2002-2017, and GNU GPL'd, by Julian Seward et al.

==12465== Using Valgrind-3.13.0 and LibVEX; rerun with -h for copyright info

==12465== Command: target/debug/deps/capstone-7ce80c0a0e7a0946 --test-threads=1 test::test_syntax

==12465==

running 1 test

test test::test_syntax ... ==12465== Thread 2 test::test_synta:

==12465== Invalid read of size 1

==12465== at 0x17876C: capstone::instruction::InsnDetail::groups_count (instruction.rs:284)

==12465== by 0x1786E6: capstone::instruction::InsnDetail::groups (instruction.rs:279)

==12465== by 0x196497: capstone::test::test_instruction_group_helper (lib.rs:464)

==12465== by 0x197654: capstone::test::instructions_match_group (lib.rs:540)

==12465== by 0x17F3A8: capstone::test::test_syntax (lib.rs:847)

==12465== by 0x1CB699: capstone::__test::TESTS::{{closure}} (lib.rs:764)

==12465== by 0x18A91D: core::ops::function::FnOnce::call_once (function.rs:223)

==12465== by 0x1F0B8E: {{closure}} (lib.rs:1451)

==12465== by 0x1F0B8E: call_once<closure,()> (function.rs:223)

==12465== by 0x1F0B8E: <F as alloc::boxed::FnBox<A>>::call_box (boxed.rs:640)

==12465== by 0x2FF739: __rust_maybe_catch_panic (lib.rs:105)

==12465== by 0x20D2FD: try<(),std::panic::AssertUnwindSafe<alloc::boxed::Box<FnBox<()>>>> (panicking.rs:289)

==12465== by 0x20D2FD: catch_unwind<std::panic::AssertUnwindSafe<alloc::boxed::Box<FnBox<()>>>,()> (panic.rs:397)

==12465== by 0x20D2FD: {{closure}} (lib.rs:1406)

==12465== by 0x20D2FD: std::sys_common::backtrace::__rust_begin_short_backtrace (backtrace.rs:136)

==12465== by 0x212174: {{closure}}<closure,()> (mod.rs:409)

==12465== by 0x212174: call_once<(),closure> (panic.rs:313)

==12465== by 0x212174: _ZN3std9panicking3try7do_call17h8f30d126163b9f85E.llvm.2059438285251411021 (panicking.rs:310)

==12465== by 0x2FF739: __rust_maybe_catch_panic (lib.rs:105)

==12465== Address 0x5e2b19a is 42 bytes inside a block of size 1,528 free'd

==12465== at 0x4C30D3B: free (in /usr/lib/valgrind/vgpreload_memcheck-amd64-linux.so)

==12465== by 0x236234: cs_free (in capstone-rs/target/debug/deps/capstone-7ce80c0a0e7a0946)

==12465== by 0x1780E6: <capstone::instruction::Instructions<'a> as core::ops::drop::Drop>::drop (instruction.rs:66)

==12465== by 0x18B80D: core::ptr::drop_in_place (ptr.rs:59)

==12465== by 0x1971B3: capstone::test::instructions_match_group (lib.rs:524)

==12465== by 0x17F3A8: capstone::test::test_syntax (lib.rs:847)

==12465== by 0x1CB699: capstone::__test::TESTS::{{closure}} (lib.rs:764)

==12465== by 0x18A91D: core::ops::function::FnOnce::call_once (function.rs:223)

==12465== by 0x1F0B8E: {{closure}} (lib.rs:1451)

==12465== by 0x1F0B8E: call_once<closure,()> (function.rs:223)

==12465== by 0x1F0B8E: <F as alloc::boxed::FnBox<A>>::call_box (boxed.rs:640)

==12465== by 0x2FF739: __rust_maybe_catch_panic (lib.rs:105)

==12465== by 0x20D2FD: try<(),std::panic::AssertUnwindSafe<alloc::boxed::Box<FnBox<()>>>> (panicking.rs:289)

==12465== by 0x20D2FD: catch_unwind<std::panic::AssertUnwindSafe<alloc::boxed::Box<FnBox<()>>>,()> (panic.rs:397)

==12465== by 0x20D2FD: {{closure}} (lib.rs:1406)

==12465== by 0x20D2FD: std::sys_common::backtrace::__rust_begin_short_backtrace (backtrace.rs:136)

==12465== by 0x212174: {{closure}}<closure,()> (mod.rs:409)

==12465== by 0x212174: call_once<(),closure> (panic.rs:313)

==12465== by 0x212174: _ZN3std9panicking3try7do_call17h8f30d126163b9f85E.llvm.2059438285251411021 (panicking.rs:310)

==12465== Block was alloc'd at

==12465== at 0x4C2FB0F: malloc (in /usr/lib/valgrind/vgpreload_memcheck-amd64-linux.so)

==12465== by 0x235F9A: cs_disasm (in capstone-rs/target/debug/deps/capstone-7ce80c0a0e7a0946)

==12465== by 0x1ED9A2: capstone::capstone::Capstone::disasm (capstone.rs:212)

==12465== by 0x1ED8EA: capstone::capstone::Capstone::disasm_all (capstone.rs:188)

==12465== by 0x197107: capstone::test::instructions_match_group (lib.rs:521)

==12465== by 0x17F3A8: capstone::test::test_syntax (lib.rs:847)

==12465== by 0x1CB699: capstone::__test::TESTS::{{closure}} (lib.rs:764)

==12465== by 0x18A91D: core::ops::function::FnOnce::call_once (function.rs:223)

==12465== by 0x1F0B8E: {{closure}} (lib.rs:1451)

==12465== by 0x1F0B8E: call_once<closure,()> (function.rs:223)

==12465== by 0x1F0B8E: <F as alloc::boxed::FnBox<A>>::call_box (boxed.rs:640)

==12465== by 0x2FF739: __rust_maybe_catch_panic (lib.rs:105)

==12465== by 0x20D2FD: try<(),std::panic::AssertUnwindSafe<alloc::boxed::Box<FnBox<()>>>> (panicking.rs:289)

==12465== by 0x20D2FD: catch_unwind<std::panic::AssertUnwindSafe<alloc::boxed::Box<FnBox<()>>>,()> (panic.rs:397)

==12465== by 0x20D2FD: {{closure}} (lib.rs:1406)

==12465== by 0x20D2FD: std::sys_common::backtrace::__rust_begin_short_backtrace (backtrace.rs:136)

==12465== by 0x212174: {{closure}}<closure,()> (mod.rs:409)

==12465== by 0x212174: call_once<(),closure> (panic.rs:313)

==12465== by 0x212174: _ZN3std9panicking3try7do_call17h8f30d126163b9f85E.llvm.2059438285251411021 (panicking.rs:310)

==12465==

...

ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 40 filtered out

==12465==

==12465== HEAP SUMMARY:

==12465== in use at exit: 32 bytes in 1 blocks

==12465== total heap usage: 775 allocs, 774 frees, 190,287 bytes allocated

==12465==

==12465== LEAK SUMMARY:

==12465== definitely lost: 0 bytes in 0 blocks

==12465== indirectly lost: 0 bytes in 0 blocks

==12465== possibly lost: 0 bytes in 0 blocks

==12465== still reachable: 32 bytes in 1 blocks

==12465== suppressed: 0 bytes in 0 blocks

==12465== Reachable blocks (those to which a pointer was found) are not shown.

==12465== To see them, rerun with: --leak-check=full --show-leak-kinds=all

==12465==

==12465== For counts of detected and suppressed errors, rerun with: -v

==12465== ERROR SUMMARY: 123 errors from 5 contexts (suppressed: 0 from 0)

We can see there that Valgrind's Memcheck plugin showed there was an error Invalid read of size 1.

Memcheck also gave us stack traces of where the memory was originally allocated and freed (since we passed --track-origins=yes/--leak-check=full, ).

Most of the stack traces relate to the test runner. The output relevant to capstone-rs is:

==12465== Invalid read of size 1

==12465== at 0x17876C: capstone::instruction::InsnDetail::groups_count (instruction.rs:284)

==12465== by 0x1786E6: capstone::instruction::InsnDetail::groups (instruction.rs:279)

==12465== by 0x196497: capstone::test::test_instruction_group_helper (lib.rs:464)

==12465== by 0x197654: capstone::test::instructions_match_group (lib.rs:540)

==12465== by 0x17F3A8: capstone::test::test_syntax (lib.rs:847)

==12465== Address 0x5e2b19a is 42 bytes inside a block of size 1,528 free'd

==12465== at 0x4C30D3B: free (in /usr/lib/valgrind/vgpreload_memcheck-amd64-linux.so)

==12465== by 0x236234: cs_free (in capstone-rs/target/debug/deps/capstone-7ce80c0a0e7a0946)

==12465== by 0x1780E6: <capstone::instruction::Instructions<'a> as core::ops::drop::Drop>::drop (instruction.rs:66)

==12465== by 0x18B80D: core::ptr::drop_in_place (ptr.rs:59)

==12465== by 0x1971B3: capstone::test::instructions_match_group (lib.rs:524)

==12465== by 0x17F3A8: capstone::test::test_syntax (lib.rs:847)

==12465== Block was alloc'd at

==12465== at 0x4C2FB0F: malloc (in /usr/lib/valgrind/vgpreload_memcheck-amd64-linux.so)

==12465== by 0x235F9A: cs_disasm (in capstone-rs/target/debug/deps/capstone-7ce80c0a0e7a0946)

==12465== by 0x1ED9A2: capstone::capstone::Capstone::disasm (capstone.rs:212)

==12465== by 0x1ED8EA: capstone::capstone::Capstone::disasm_all (capstone.rs:188)

==12465== by 0x197107: capstone::test::instructions_match_group (lib.rs:521)

==12465== by 0x17F3A8: capstone::test::test_syntax (lib.rs:847)

Notice that Rust functions (such as capstone::capstone::Capstone::disasm) and C functions (such as cs_disasm()) appeared in the output.

Valgrind does not care about the source langauge of functions.

Valgrind even correctly demangles the names.

Examining the Valgrind output🔗

Allocate and free (instructions_match_group() in lib.rs):

// cs: &mut Capstone

// insns_buf: Vec<u8>

521: let insns: Vec<Insn> = cs.disasm_all(&insns_buf, 0x1000) // Alloc

522: .expect("Failed to disassemble")

523: .iter()

524: .collect(); // Free

We can see here that cs.disasm_all() caused the allocation.

The resulting instructions are collected into a Vec<Insn>.

The underlying memory buffer with the instructions is immediately freed after the collect() (implicitly through the Drop trait).

Invalid read (test_instruction_group_helper() in lib.rs):

// cs: &Capstone

// insn: &Insn

461: let detail = cs.insn_detail(insn).expect("Unable to get detail");

...

// Invalid read

464: let instruction_group_ids: HashSet<_> = detail.groups().collect();

The insn_detail() method

of the Capstone struct

takes an Insn instance

to get the InsnDetail struct

that contains extra details about instructions.

For example, InsnDetail contains information about registers that are implicitly read/written and logical groups to which an instruction belongs.

At this point, I knew that memory was being freed too soon.

The InsnDetail struct relies on the memory allocated by cs_disasm() to remain.

Even though tests were passing on Linux with the default allocator, the tests actually read from freed memory. Rust's default allocator simply masked the issue.

The Final Fix🔗

The Capstone instance did not live long enough.

How could we fix the problem?

Answer:

Declaring a lifetime for the Capstone struct.

I added the lifetime 'cs to the Capstone struct definition:

pub struct Capstone<'cs> {

/* ... */

_marker: PhantomData<&'cs mut c_void>,

}

The new lifetime 'cs told the Rust type system that the Capstone struct logically contains a reference.

After implementing the change, the tests passed (on Mac OS and Linux).

cargo +nightly test --features alloc_system

Valgrind's Memcheck plugin also did not detect any invalid reads:

valgrind ./target/debug/deps/capstone-cf27dd2002993511

The test code that contained the allocation and free:

// cs: &mut Capstone

// insns_buf: Vec<u8>

let insns: Vec<Insn> = cs.disasm_all(&insns_buf, 0x1000) // Alloc

.expect("Failed to disassemble")

.iter()

.collect(); // Free

However, that code is not actually valid.

After adding adding the lifetime 'a to Capstone, I got the following compile error:

error[E0597]: borrowed value does not live long enough

--> src/lib.rs:564:29

|

564 | let insns: Vec<_> = cs.disasm_all(&insns_buf, 0x1000)

| _____________________________^

565 | | .expect("Failed to disassemble")

| |____________________________________________^ temporary value does not live long enough

566 | .iter()

567 | .collect();

| - temporary value dropped here while still borrowed

...

581 | }

| - temporary value needs to live until here

|

= note: consider using a `let` binding to increase its lifetime

The Instructions temporary variable returned by expect() does not live long enough.

The Instructions type:

- Returns an iterator over the instructions with the

iter()method - Frees the memory allocated by

disasm_all()in theDropimplementation.

That means that if the temporary Instructions is dropped early, then:

- The underlying buffer with the instructions will be dropped.

- The final

collect()'d vector will contain dangling references to instructions that have already been freed.

In order to fix the test code, I rewrote the snippet as:

// cs: &mut Capstone

// insns_buf: Vec<u8>

let insns_backing: Instructions = cs.disasm_all(&insns_buf, 0x1000)

.expect("Failed to disassemble");

let insns: Vec<_> = insns_backing.iter().collect();

The local variable insns_backing lives until the end of the function, so the insns variable does not contain dangling references.

Why does adding a lifetime work?🔗

If you have any ideas, please share them in the comments.

Update 2018-10-05:

Merged pull-request from earthengine that removes the extra lifetime on the Capstone structure.

The Capstone structure "owns" the handle allocation, so no lifetime needs to be exposed.

Conclusion🔗

This bug was originally classified as Mac OS-only. Even then, tests only failed sometimes.

There were several failed attempts to fix the bug, but I discovered (and fixed) other bugs.

For example, the Capstone struct was found to be Send/Sync.

I was also able to finaly track down the underlying bug with Valgrind. Even though Valgrind is usually used on code that is written in C/C++, Valgrind is a useful tool for programs written in Rust.

I learned several lessons in debugging this issue:

- Extensive tests help you find and fix bugs. capstone-rs contains over 2000 lines of tests. Without the Rust tests, the test failure on Mac OS would have never been reported.

- Use debugging tools. Valgrind's Memcheck plugin helped track down the bug. Safe Rust code prevents the types of bugs that the Valgrind plugins catch. But, unsafe Rust code and C code can have these types of bugs. Hence, tools that are used to debug C code are useful for debugging unsafe Rust code.

- You must be careful with not only the internal implementation, but the public interface to Rust FFI bindings. The public interfaces (including lifetimes) tells the Rust type system how long different items may live.

Thanks to Gulshan Singh for reviewing this post.

Update 2021-10-17: Updated links to recent Rust documentation